AI's Reality Check: Why Gemini 3 and GPT-5 Flunked the Physics Test

Top models like Gemini 3 and GPT-5 just faced the 'CritPt' physics benchmark, and the results were humbling. Here is what that means for the future of AI reasoning.

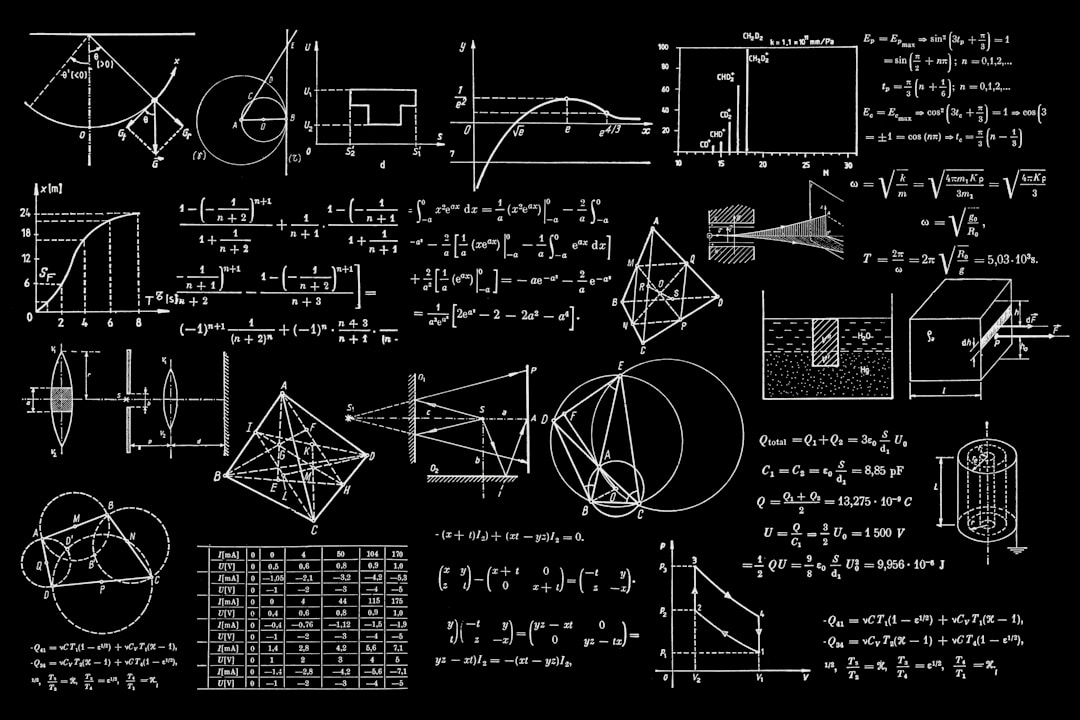

We often hear that AGI is around the corner. But a new benchmark released this week suggests otherwise. The CritPt test, designed by over 50 physicists, threw complex, unpublished research problems at the world's top AI models.

The Results? Failing Grades.

Even the brand-new Gemini 3 Pro and GPT-5 struggled to reach 10% accuracy on these novel tasks. Because the problems are unpublished, the test is designed to resist memorization: the models couldn't simply recall answers from their training data and had to reason their way to a solution.

What This Means

This isn't a sign that AI is useless. It's a sign that AI is currently a research assistant, not a lead scientist. For developers and businesses, the takeaway is clear: use these tools to summarize and iterate, but don't trust them to solve net-new problems without close human oversight.